Complete List of Cheat Sheets and Infographics for Artificial Intelligence (AI), Neural Networks, Machine Learning, Deep Learning and Big Data.

- Scikit-learn is a library in Python that provides many unsupervised and supervised learning algorithms. It’s built upon some of the technology you might already be familiar with, like NumPy, SciPy, and Matplotlib! As you build robust Machine Learning programs, it’s helpful to have all the sklearn commands in one place in case you forget.

- Scikit-Learn, also known as sklearn, is Python’s premier general-purpose machine learning library. While you’ll find other packages that do better at certain tasks, Scikit-Learn’s versatility makes it the best starting place for most ML problems.

With the power and popularity of scikit-learn for machine learning in Python, this library is a foundation of any practitioner’s toolset. Preview its core methods with this review of predictive modelling, clustering, dimensionality reduction, feature importance, and data transformation.

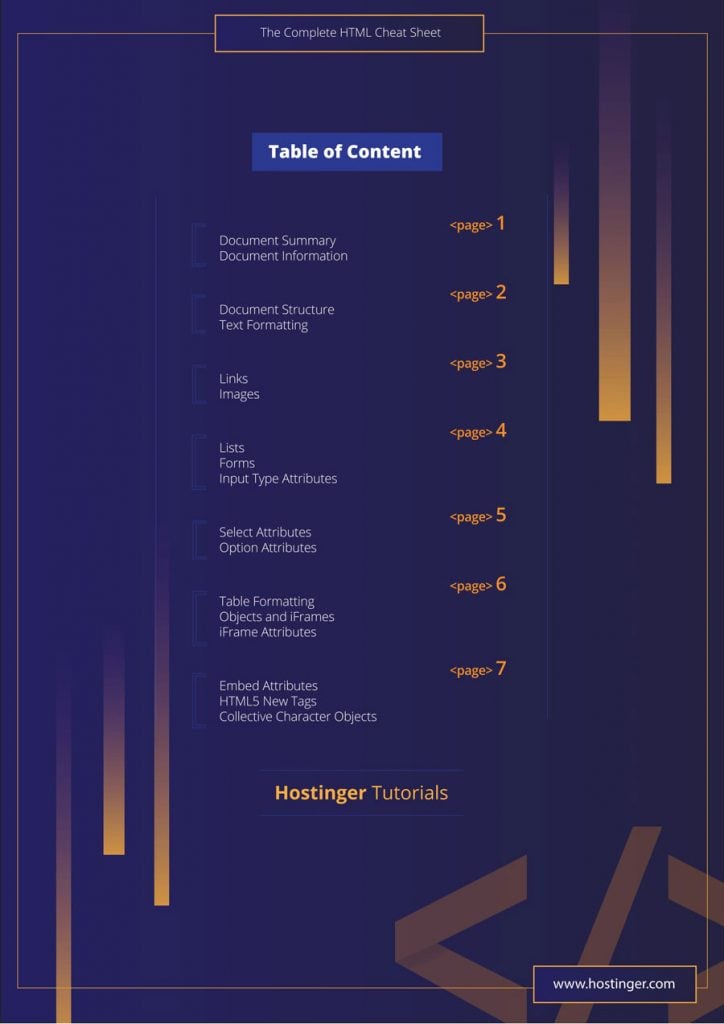

Content Summary

Neural Networks

Neural Networks Graphs

Machine Learning Overview

Machine Learning: Scikit-learn algorithm

Scikit-Learn

Machine Learning: Algorithm Cheat Sheet

Python for Data Science

TensorFlow

Keras

Numpy

Pandas

Data Wrangling

Data Wrangling with dplyr and tidyr

Scipy

Matplotlib

Data Visualization

PySpark

Big-O

Resources

Neural Networks

Artificial neural networks (ANN) or connectionist systems are computing systems vaguely inspired by the biological neural networks that constitute animal brains. The neural network itself is not an algorithm, but rather a framework for many different machine learning algorithms to work together and process complex data inputs. Such systems “learn” to perform tasks by considering examples, generally without being programmed with any task-specific rules.

Neural Networks Graphs

Graph Neural Networks (GNNs) for representation learning of graphs broadly follow a neighborhood aggregation framework, where the representation vector of a node is computed by recursively aggregating and transforming feature vectors of its neighboring nodes. Many GNN variants have been proposed and have achieved state-of-the-art results on both node and graph classification tasks.
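As a rough illustration of the neighborhood aggregation idea described above, here is a minimal NumPy sketch of a single aggregation round. The toy graph, the choice of mean aggregation with self-loops, the ReLU, and the identity `weight` matrix are all assumptions made for the example; real GNN variants differ in exactly these choices.

```python
import numpy as np

# Hypothetical 4-node undirected graph as an adjacency matrix
adjacency = np.array([[0, 1, 1, 0],
                      [1, 0, 1, 0],
                      [1, 1, 0, 1],
                      [0, 0, 1, 0]], dtype=float)

# One 2-dimensional feature vector per node (illustrative values)
features = np.array([[1.0, 0.0],
                     [0.0, 1.0],
                     [1.0, 1.0],
                     [2.0, 0.0]])

def aggregate_step(adjacency, features, weight):
    """One round: average each node with its neighbors, then transform."""
    with_self = adjacency + np.eye(len(adjacency))   # include the node itself
    degree = with_self.sum(axis=1, keepdims=True)    # neighbors + self
    mean_neighbors = (with_self @ features) / degree # aggregate
    return np.maximum(mean_neighbors @ weight, 0.0)  # transform + ReLU

weight = np.eye(2)  # placeholder for a learned weight matrix
hidden = aggregate_step(adjacency, features, weight)
print(hidden)
```

Applying `aggregate_step` repeatedly lets information from nodes further away reach each node, which is the recursive part of the framework.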

Machine Learning Overview

Machine learning (ML) is the scientific study of algorithms and statistical models that computer systems use to progressively improve their performance on a specific task. Machine learning algorithms build a mathematical model of sample data, known as “training data”, in order to make predictions or decisions without being explicitly programmed to perform the task. Machine learning algorithms are used in the applications of email filtering, detection of network intruders, and computer vision, where it is infeasible to develop an algorithm of specific instructions for performing the task.

Machine Learning: Scikit-learn algorithm

This machine learning cheat sheet will help you find the right estimator for the job, which is often the most difficult part. The flowchart points to the documentation and a rough guide for each estimator, so you can learn more about each kind of problem and how to solve it.

Scikit-Learn

Scikit-learn (formerly scikits.learn) is a free software machine learning library for the Python programming language. It features various classification, regression and clustering algorithms including support vector machines, random forests, gradient boosting, k-means and DBSCAN, and is designed to interoperate with the Python numerical and scientific libraries NumPy and SciPy.

Machine Learning: Algorithm Cheat Sheet

This machine learning cheat sheet from Microsoft Azure will help you choose the appropriate machine learning algorithms for your predictive analytics solution. First, the cheat sheet asks you about the nature of your data and then suggests the best algorithm for the job.

Python for Data Science

TensorFlow

In May 2017 Google announced the second-generation of the TPU, as well as the availability of the TPUs in Google Compute Engine. The second-generation TPUs deliver up to 180 teraflops of performance, and when organized into clusters of 64 TPUs provide up to 11.5 petaflops.

Keras

In 2017, Google’s TensorFlow team decided to support Keras in TensorFlow’s core library. Chollet explained that Keras was conceived to be an interface rather than an end-to-end machine-learning framework. It presents a higher-level, more intuitive set of abstractions that make it easy to configure neural networks regardless of the backend scientific computing library.

Numpy

NumPy targets the CPython reference implementation of Python, which is a non-optimizing bytecode interpreter. Mathematical algorithms written for this version of Python often run much slower than compiled equivalents. NumPy addresses the slowness problem partly by providing multidimensional arrays and functions and operators that operate efficiently on arrays; using them requires rewriting some code, mostly inner loops, with NumPy.

Pandas

The name ‘Pandas’ is derived from the term “panel data”, an econometrics term for multidimensional structured data sets.

Data Wrangling

The term “data wrangler” is starting to infiltrate pop culture. In the 2017 movie Kong: Skull Island, one of the characters, played by actor Marc Evan Jackson, is introduced as “Steve Woodward, our data wrangler”.

Data Wrangling with dplyr and tidyr

Scipy

SciPy builds on the NumPy array object and is part of the NumPy stack which includes tools like Matplotlib, pandas and SymPy, and an expanding set of scientific computing libraries. This NumPy stack has similar users to other applications such as MATLAB, GNU Octave, and Scilab. The NumPy stack is also sometimes referred to as the SciPy stack.

Matplotlib

matplotlib is a plotting library for the Python programming language and its numerical mathematics extension NumPy. It provides an object-oriented API for embedding plots into applications using general-purpose GUI toolkits like Tkinter, wxPython, Qt, or GTK+. There is also a procedural “pylab” interface based on a state machine (like OpenGL), designed to closely resemble that of MATLAB, though its use is discouraged. SciPy makes use of matplotlib. pyplot is a matplotlib module which provides a MATLAB-like interface. matplotlib is designed to be as usable as MATLAB, with the ability to use Python, with the advantage that it is free.

Data Visualization

PySpark

Big-O

Big O notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. It is a member of a family of notations invented by Paul Bachmann, Edmund Landau and others, collectively called Bachmann–Landau notation or asymptotic notation.

Resources

Big-O Algorithm Cheat Sheet

Bokeh Cheat Sheet

Data Science Cheat Sheet

Data Wrangling Cheat Sheet

Data Wrangling

Ggplot Cheat Sheet

Keras Cheat Sheet

Keras

Machine Learning Cheat Sheet

Machine Learning Cheat Sheet

ML Cheat Sheet

Matplotlib Cheat Sheet

Matplotlib

Neural Networks Cheat Sheet

Neural Networks Graph Cheat Sheet

Neural Networks

Numpy Cheat Sheet

NumPy

Pandas Cheat Sheet

Pandas

Pandas Cheat Sheet

Pyspark Cheat Sheet

Scikit Cheat Sheet

Scikit-learn

Scikit-learn Cheat Sheet

Scipy Cheat Sheet

SciPy

TensorFlow Cheat Sheet

TensorFlow

Course Duck > The World’s Best Machine Learning Courses & Tutorials in 2020

By Andre Ye, Cofounder at Critiq, Editor & Top Writer at Medium.

Source: Pixabay.

There are several areas of data mining and machine learning that will be covered in this cheat-sheet:

- Predictive Modelling. Regression and classification algorithms for supervised learning (prediction), metrics for evaluating model performance.

- Clustering. Methods to group unlabeled data into clusters: K-Means, selecting the number of clusters based on objective metrics.

- Dimensionality Reduction. Methods to reduce the dimensionality of data and attributes of those methods: PCA and LDA.

- Feature Importance. Methods to find the most important feature in a dataset: permutation importance, SHAP values, Partial Dependence Plots.

- Data Transformation. Methods to transform the data for greater predictive power, for easier analysis, or to uncover hidden relationships and patterns: standardization, normalization, box-cox transformations.

All images were created by the author unless explicitly stated otherwise.

Predictive Modelling

Train-test-split is an important part of testing how well a model performs: the model is trained on designated training data and tested on designated testing data. This way, the model’s ability to generalize to new data can be measured. In sklearn, lists, pandas DataFrames, and NumPy arrays are all accepted for the X and y parameters.
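A minimal sketch of such a split, assuming a small made-up array for X and y and a 20% test share (both chosen only for illustration):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data: 10 samples with 2 features each (illustrative values only)
X = np.arange(20).reshape(10, 2)
y = np.array([0, 1] * 5)

# Hold out 20% of the rows for testing; random_state makes the split repeatable
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)
print(X_train.shape, X_test.shape)
```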

Training a standard supervised learning model takes the form of an import, the creation of an instance, and the fitting of the model.
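The import, instantiate, fit pattern might look like the sketch below. The random forest and the bundled iris dataset are stand-ins chosen for the example; any sklearn estimator follows the same three steps.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier  # 1. import

X, y = load_iris(return_X_y=True)

# 2. create an instance (hyperparameters here are arbitrary example values)
model = RandomForestClassifier(n_estimators=50, random_state=0)

# 3. fit the model to the training data
model.fit(X, y)

# The fitted model can now make predictions
predictions = model.predict(X[:5])
print(predictions)
```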

sklearn classifier models are listed below, with the branch highlighted in blue and the model name in orange.

sklearn regressor models are listed below, with the branch highlighted in blue and the model name in orange.

Evaluating model performance is done with train-test data in this form:
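One possible form, sketched with accuracy as the metric and logistic regression on the iris dataset as a placeholder model (both are assumptions for the example; any metric from sklearn.metrics takes the true and predicted values in the same way):

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Fit on the training portion only
model = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# Predict on the held-out portion and compare with the true labels
y_pred = model.predict(X_test)
score = accuracy_score(y_test, y_pred)
print(score)
```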

sklearn metrics for classification and regression are listed below, with the most commonly used metric marked in green. Many of the grey metrics are more appropriate than the green-marked ones in certain contexts. Each has its own advantages and disadvantages, balancing priority comparisons, interpretability, and other factors.

Clustering

Before clustering, the data needs to be standardized (information for this can be found in the Data Transformation section). Clustering is the process of creating clusters based on point distances.

Source. Image free to share.

Training and creating a K-Means clustering model creates a model that can cluster and retrieve information about the clustered data.
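A minimal sketch of fitting a K-Means model, assuming a tiny hand-made dataset with two obvious groups and `n_clusters=2`:

```python
import numpy as np
from sklearn.cluster import KMeans

# Two small, well-separated groups of points (illustrative values)
data = np.array([[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
                 [8.0, 8.0], [8.2, 7.9], [7.8, 8.1]])

# n_init and random_state are set explicitly so results are repeatable
model = KMeans(n_clusters=2, n_init=10, random_state=0)
model.fit(data)
```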

Accessing the labels of each of the data points in the data can be done with:

Similarly, the label of each data point can be stored in a column of the data with:

Accessing the cluster label of new data can be done with the following command. The new_data can be in the form of an array, a list, or a DataFrame.

Accessing the cluster centers of each cluster is returned in the form of a two-dimensional array with:
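The four operations described above (reading the labels, storing them in a column, labelling new data, and reading the cluster centers) could be sketched as follows. The DataFrame contents and the column name `cluster` are made up for the example.

```python
import pandas as pd
from sklearn.cluster import KMeans

data = pd.DataFrame({"x": [1.0, 1.2, 0.9, 8.0, 8.2, 7.8],
                     "y": [1.0, 0.8, 1.1, 8.0, 7.9, 8.1]})
model = KMeans(n_clusters=2, n_init=10, random_state=0).fit(data)

# Label assigned to each training point
labels = model.labels_

# Store the labels in a column (a copy keeps the training features untouched)
clustered = data.copy()
clustered["cluster"] = labels

# Cluster label for unseen points with the same feature columns
new_data = pd.DataFrame({"x": [1.1, 7.9], "y": [0.9, 8.0]})
new_labels = model.predict(new_data)

# Two-dimensional array: one row per cluster, one column per feature
centers = model.cluster_centers_
print(centers)
```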

To find the optimal number of clusters, use the silhouette score, which is a metric of how well a certain number of clusters fits the data. For each number of clusters within a predefined range, a K-Means clustering algorithm is trained, and its silhouette score is saved to a list (scores). data is the x that the model is trained on.

After the scores are saved to the list scores, they can be plotted or searched programmatically to find the highest one.
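The search over cluster counts might be sketched like this. The synthetic three-blob `data`, the range of 2 to 6 clusters, and the name `best_k` are assumptions for the example.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score

# Synthetic data: three well-separated blobs of 30 points each
rng = np.random.default_rng(0)
blob_centers = np.array([[0, 0], [10, 10], [0, 10]])
data = np.vstack([c + rng.normal(scale=0.5, size=(30, 2)) for c in blob_centers])

cluster_range = range(2, 7)  # silhouette score needs at least 2 clusters
scores = []
for k in cluster_range:
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(data)
    scores.append(silhouette_score(data, model.labels_))

# Pick the number of clusters with the highest silhouette score
best_k = cluster_range[int(np.argmax(scores))]
print(best_k)
```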

Dimensionality Reduction

Dimensionality reduction is the process of expressing high-dimensional data in a reduced number of dimensions such that each one carries as much information as possible. Dimensionality reduction may be used for visualization of high-dimensional data or to speed up machine learning models by removing low-information or correlated features.

Principal Component Analysis, or PCA, is a popular method of reducing the dimensionality of data by drawing several orthogonal (perpendicular) vectors in the feature space to represent the reduced number of dimensions. The variable number represents the number of dimensions the reduced data will have. In the case of visualization, for example, it would be two dimensions.

Visual demonstration of how PCA works. Source.

Fitting the PCA Model: The .fit_transform function automatically fits the model to the data and transforms it into a reduced number of dimensions.

Explained Variance Ratio: Calling model.explained_variance_ratio_ will yield a list where each item corresponds to that dimension’s “explained variance ratio,” which essentially means the percent of the information in the original data represented by that dimension. The sum of the explained variance ratios is the total percent of information retained in the reduced dimensionality data.

PCA Feature Weights: In PCA, each newly created feature is a linear combination of the former data’s features. These linear weights can be accessed with model.components_, and are a good indicator for feature importance (a higher linear weight indicates more information represented in that feature).
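The three PCA steps above (fitting, explained variance ratio, feature weights) can be sketched together. The iris dataset and `number = 2` are example choices, not part of the original text.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X, _ = load_iris(return_X_y=True)  # 150 samples, 4 features

number = 2  # dimensions the reduced data will have
model = PCA(n_components=number)

# Fit the model and project the data in one call
reduced = model.fit_transform(X)

# Share of the original variance captured by each new dimension
ratios = model.explained_variance_ratio_
retained = ratios.sum()

# Linear weights: one row per new dimension, one column per original feature
weights = model.components_
print(reduced.shape, retained, weights.shape)
```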

Linear Discriminant Analysis (LDA, not to be confused with Latent Dirichlet Allocation) is another method of dimensionality reduction. The primary difference between LDA and PCA is that LDA is a supervised algorithm, meaning it takes into account both x and y. Principal Component Analysis only considers x and is hence an unsupervised algorithm.

PCA attempts to maintain the structure (variance) of the data purely based on distances between points, whereas LDA prioritizes clean separation of classes.
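A short sketch of LDA used for dimensionality reduction, again assuming the iris dataset as example data. Note that, unlike PCA, the labels y are passed to the fit, and the number of components can be at most the number of classes minus one.

```python
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = load_iris(return_X_y=True)  # 3 classes, so at most 2 components

lda = LinearDiscriminantAnalysis(n_components=2)

# Supervised: the class labels guide which directions are kept
reduced = lda.fit_transform(X, y)
print(reduced.shape)
```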

Feature Importance

Feature Importance is the process of finding the most important feature to a target. Through PCA, the feature that contains the most information can be found, but feature importance concerns a feature’s impact on the target. A change in an ‘important’ feature will have a large effect on the y-variable, whereas a change in an ‘unimportant’ feature will have little to no effect on the y-variable.

Permutation Importance is a method to evaluate how important a feature is. A single trained model is scored repeatedly on data in which the values of one column at a time have been randomly shuffled, which breaks that column’s relationship with the target. The corresponding decrease in model accuracy represents how important the column is to the model’s predictive power. The eli5 library is used for Permutation Importance.

In the data that this Permutation Importance model was trained on, the column lat has the largest impact on the target variable (in this case, the house price). Permutation Importance is a useful method for deciding which features to remove (correlated or redundant features that actually confuse the model, marked by negative permutation importance values) for best predictive performance.
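The article uses eli5; as a substitute that avoids the extra dependency, the sketch below uses scikit-learn's own `sklearn.inspection.permutation_importance`, which follows the same shuffle-and-rescore idea. The diabetes dataset and the random forest are placeholders, not the house-price data discussed above.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

model = RandomForestRegressor(n_estimators=50, random_state=0)
model.fit(X_train, y_train)

# Shuffle each column several times on held-out data and record the score drop
result = permutation_importance(
    model, X_test, y_test, n_repeats=5, random_state=0
)

importances = result.importances_mean     # mean score drop per feature
ranking = importances.argsort()[::-1]     # feature indices, most important first
print(ranking)
```

Features whose mean importance is near zero or negative are candidates for removal.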

SHAP is another method of evaluating feature importance, borrowing Shapley values from cooperative game theory to estimate how much each feature, treated as a player, contributes to a prediction. Unlike permutation importance, SHapley Additive exPlanations use a more formulaic and calculation-based method towards evaluating feature importance. SHAP’s tree explainer requires a tree-based model (Decision Tree, Random Forest) and accommodates both regression and classification.

PDPs, or partial dependence plots, are a staple in data mining and analysis, showing how certain values of one feature influence a change in the target variable. Imports required include pdpbox for the dependence plots and matplotlib to display the plots.

Isolated PDPs: the following code displays the partial dependence plot, where feat_name is the feature within X that will be isolated and compared to the target variable. The second line of code saves the data, whereas the third constructs the canvas to display the plot.

The partial dependence plot shows the effect of certain values and changes in the number of square feet of living space on the price of a house. Shaded areas represent confidence intervals.
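The article relies on pdpbox for plotting. As a hedged alternative that computes the same kind of curve without plotting, this sketch uses scikit-learn's `sklearn.inspection.partial_dependence`. The diabetes dataset, the random forest, and `feat_index = 2` are assumptions for the example rather than the house-price setup described above.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import partial_dependence

X, y = load_diabetes(return_X_y=True)
model = RandomForestRegressor(n_estimators=50, random_state=0).fit(X, y)

feat_index = 2  # column to isolate (body mass index in this dataset)

# Average prediction as the isolated feature sweeps over a grid of values
pd_result = partial_dependence(
    model, X, features=[feat_index], kind="average", grid_resolution=20
)
averaged = pd_result["average"][0]  # one value per grid point
print(averaged)
```

Plotting `averaged` against the grid of feature values gives the isolated partial dependence curve.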

Contour PDPs: Partial dependence plots can also take the form of contour plots, which compare not one isolated variable but the relationship between two isolated variables. The two features that are to be compared are stored in a variable compared_features.

The relationship between the two features shows the corresponding price when only considering these two features. Partial dependence plots are chock-full of data analysis and findings, but be conscious of large confidence intervals.

Data Transformation

Standardizing or scaling is the process of ‘reshaping’ the data such that it contains the same information but has a mean of 0 and a variance of 1. Many algorithms behave better numerically on data that has been scaled this way.

The transformed_data is standardized and can be used for many distance-based algorithms such as Support Vector Machine and K-Nearest Neighbors. The results of algorithms that use standardized data need to be ‘de-standardized’ so they can be properly interpreted. .inverse_transform() can be used to perform the opposite of standard transforms.
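A minimal sketch of standardizing and then reversing the transform with `StandardScaler`; the small `data` array is made up for the example.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Two features on very different scales (illustrative values)
data = np.array([[1.0, 200.0], [2.0, 300.0], [3.0, 400.0]])

scaler = StandardScaler()

# Each column now has mean 0 and variance 1
transformed_data = scaler.fit_transform(data)

# 'De-standardize' back to the original units
restored = scaler.inverse_transform(transformed_data)
print(transformed_data)
```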

Normalizing data puts it on a 0 to 1 scale, something that, similar to standardized data, makes the data mathematically easier to use for the model.

While normalizing doesn’t transform the shape of the data as standardizing does, it restricts the boundaries of the data. Whether to normalize or standardize data depends on the algorithm and the context.
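Normalizing to a 0 to 1 scale could be sketched with `MinMaxScaler` (one of several scalers in sklearn.preprocessing; the `data` array is again made up):

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

data = np.array([[1.0, 200.0], [2.0, 300.0], [3.0, 400.0]])

scaler = MinMaxScaler()  # default feature_range is (0, 1)

# Each column is rescaled so its minimum maps to 0 and its maximum to 1
normalized = scaler.fit_transform(data)
print(normalized)
```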

Box-cox transformations involve raising the data to various powers to transform it. Box-cox transformations can normalize data, make it more linear, or decrease the complexity. These transformations don’t only involve raising the data to powers but also fractional powers (square rooting) and logarithms.

For instance, consider data points situated along the function g(x). By applying the logarithm box-cox transformation, the data can be easily modelled with linear regression.

Created with Desmos.

sklearn automatically estimates, for each feature, the Box-Cox power parameter that makes the transformed data most closely resemble a normal distribution.

Because Box-Cox transformations involve fractional powers and logarithms, the data passed to them must be strictly positive (shifting the data so that its minimum is above zero beforehand can take care of this; a 0-to-1 normalization alone still leaves a zero). For data with negative data points as well as positive ones, set method = 'yeo-johnson' for a similar approach to making the data more closely resemble a bell curve.
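Both variants can be sketched with `PowerTransformer` from sklearn.preprocessing. The log-normal sample and the shifted copy containing negative values are synthetic data invented for the example.

```python
import numpy as np
from sklearn.preprocessing import PowerTransformer

rng = np.random.default_rng(0)

# Strictly positive, right-skewed data: suitable for Box-Cox
positive = rng.lognormal(size=(200, 1))
boxcox = PowerTransformer(method="box-cox")
positive_t = boxcox.fit_transform(positive)

# Shifted copy that contains negative values: use Yeo-Johnson instead
mixed = positive - positive.mean()
yeo = PowerTransformer(method="yeo-johnson")
mixed_t = yeo.fit_transform(mixed)

# Estimated power parameter (lambda) for the single feature
print(boxcox.lambdas_, yeo.lambdas_)
```

By default `PowerTransformer` also standardizes its output to zero mean and unit variance; pass `standardize=False` to keep only the power transform.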

Original. Reposted with permission.
