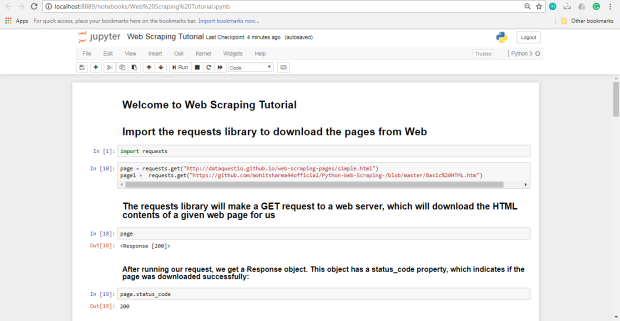

Web scraping, also called web data extraction, is the technique of harvesting data from a web page by leveraging the patterns in the page’s underlying code. It can be used to collect unstructured information from websites for processing and storage in a structured format.

1.2 Web Scraping Can Be Ugly

Depending on which websites you want to scrape, the process can be involved and quite tedious. Many websites are well aware that people scrape them, so they offer Application Programming Interfaces (APIs) that make requests for information easier for the user and make access easier for the server administrators to control. Usually the user must apply for a “key” to gain access.

For premium sites, the key costs money. Some sites, like Google and Wunderground (a popular weather site), allow some number of free accesses before they start charging you. Even so, the results are typically returned in XML or JSON, which you then have to parse to get the information you want. In the best case, there is an R package that wraps the parsing and returns lists or data frames.

Here is a summary:


First, always try to find an R package that will access the site (e.g. New York Times, Wunderground, PubMed). These packages (e.g. omdbapi, easyPubMed, RBitCoin, rtimes) provide a programmatic search interface and return data frames with little to no effort on your part.

If no package exists, then hopefully there is an API that allows you to query the website and get results back in JSON or XML. I prefer JSON because it’s “easier” and the packages for parsing JSON return lists, which are native data structures in R, so you can easily turn the results into data frames. You will usually use the rvest package in conjunction with the XML and RJSONIO packages.
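As a minimal sketch of the JSON route described above, the following parses a JSON response with RJSONIO and stacks the records into a data frame. The JSON text, the field names (`city`, `temp_c`) and the variable names are hypothetical; the response is inlined as a string so the example runs without a network connection or an API key.

```r
library(RJSONIO)

# Hypothetical API response (an assumption for illustration), inlined so the
# example does not depend on any real service.
json_text <- '[
  {"city": "Boston",  "temp_c": 12.5},
  {"city": "Chicago", "temp_c": 8}
]'

# asText = TRUE says the argument is JSON content, not a file name.
# simplify = FALSE keeps every JSON object as a named R list.
records <- fromJSON(json_text, asText = TRUE, simplify = FALSE)

# Turn each record into a one-row data frame, then stack the rows.
weather <- do.call(
  rbind,
  lapply(records, function(rec) as.data.frame(rec, stringsAsFactors = FALSE))
)

print(weather)
```

With a real API, `json_text` would instead be the body of the HTTP response; the parsing and the conversion to a data frame stay the same.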

If the website doesn’t have an API, then you will need to scrape text. This isn’t hard, but it is tedious. You will need to use rvest to parse HTML elements. If you want to parse multiple pages, then you will need to use rvest to move to the other pages and possibly fill out forms. If there is a lot of JavaScript, then you might need to use RSelenium to programmatically manage the web page.
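The scraping route can be sketched with rvest as follows. This is an illustration under stated assumptions, not a recipe for any particular site: the HTML snippet, the `obs` table id and the `next` link class are made up, and the page is inlined as a string so the code runs offline. It assumes rvest 1.0 or later, where `html_element()` is available.

```r
library(rvest)

# Hypothetical page content (an assumption for illustration); a real scrape
# would pass a URL to read_html() instead of a literal string.
raw_html <- '<html><body>
  <h1>Station report</h1>
  <table id="obs">
    <tr><th>city</th><th>temp_c</th></tr>
    <tr><td>Boston</td><td>12.5</td></tr>
    <tr><td>Chicago</td><td>8</td></tr>
  </table>
  <a class="next" href="/page/2">Next</a>
</body></html>'

page <- read_html(raw_html)

# Select elements with CSS selectors and extract their text.
title <- html_text(html_element(page, "h1"))

# html_table() converts an HTML <table> into a data frame (a tibble).
obs <- html_table(html_element(page, "#obs"))

# The href of the "next" link is what you would request to reach page 2.
next_link <- html_attr(html_element(page, "a.next"), "href")

print(title)
print(obs)
print(next_link)
```

For multi-page scrapes, the same selectors can be applied in a loop that follows `next_link` until no such link is found. rvest also provides `session()` and `html_form()` for navigating pages and working with forms.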